Attention-based Convolutional Neural Network for Music Genre and Mood Classification

Intro

In recent years, interest in deep-learning-based Music Information Retrieval (MIR) has increased sharply, while traditional MIR techniques remain difficult to apply, non-universal, and sometimes proprietary. This project extends this line of research by predicting the genre and the valence-based mood of an audio clip simultaneously, using a multi-output CNN that learns features from mel spectrograms generated from the audio.
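To make the multi-output idea concrete, here is a minimal Python sketch of how two classification heads sharing one CNN backbone can be trained with a single objective. It is an illustration, not the project's code. It assumes (a hypothetical choice not stated in this README) that the genre and mood cross-entropy losses are simply summed, with an optional `mood_weight`. The names `softmax`, `cross_entropy` and `joint_loss` are illustrative, and the logit lists stand in for the outputs of the two heads.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a 1-D list of logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(logits: Sequence[float], target: int) -> float:
    """Negative log-likelihood of the target class under softmax(logits)."""
    return -math.log(softmax(logits)[target])


def joint_loss(genre_logits: Sequence[float], genre_target: int,
               mood_logits: Sequence[float], mood_target: int,
               mood_weight: float = 1.0) -> float:
    """Hypothetical multi-output objective: one shared CNN feeds a genre head
    and a mood head, and their cross-entropies are summed.
    mood_weight is an assumed knob for balancing the two tasks."""
    genre_term = cross_entropy(genre_logits, genre_target)
    mood_term = cross_entropy(mood_logits, mood_target)
    return genre_term + mood_weight * mood_term


# Example: 3 genre classes and 2 mood classes for a single clip.
print(joint_loss([2.0, 0.5, -1.0], 0, [0.3, 1.2], 1))
```

Summing the losses lets gradients from both tasks update the shared convolutional layers. The weighting actually used in the project is not specified here.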

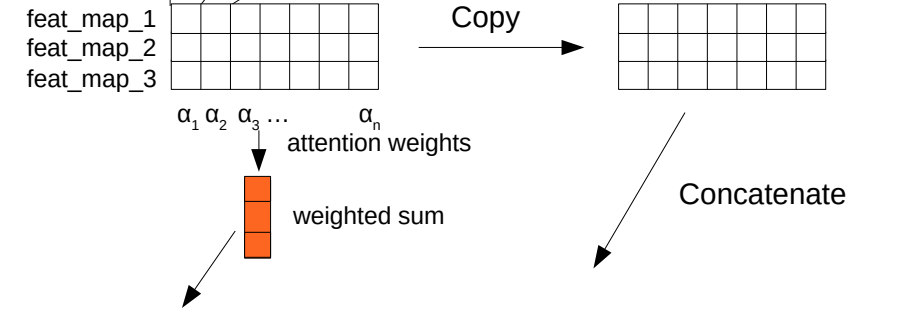

Notably, an attention mechanism is combined with the CNN to extract features from the audio samples. The motivation is that a music genre is distinguished by the spectral characteristics of its signal: audio samples from the same genre should share similar patterns or distributions, and intuitively, different parts of the signal deserve different weights. To improve accuracy, attention is therefore applied to the features extracted by the convolutional layers, so that their distribution can be learned better. Concretely, an additional parallel attention layer assigns weights to the features of the different feature maps, and a weighted sum is then computed for each feature map. The structure is shown below. For the detailed model architecture, please refer to the paper.
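The per-feature-map weighted sum described above can be sketched in plain Python. This is an illustrative assumption, not the project's implementation. Each feature map is flattened to a list of positions. The attention scores are taken as given; in the model they would come from the learned parallel attention branch, which is not reproduced here. A softmax over the positions of each map turns its scores into weights that sum to 1. The function name `attention_pool` is hypothetical.

```python
import math
from typing import List, Sequence


def softmax(xs: Sequence[float]) -> List[float]:
    """Numerically stable softmax; the returned weights sum to 1."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]


def attention_pool(feature_maps: Sequence[Sequence[float]],
                   attention_scores: Sequence[Sequence[float]]) -> List[float]:
    """Collapse each feature map to one value via an attention-weighted sum.

    feature_maps[c][t]     -- activation at flattened position t of map c,
                              as produced by the convolutional layers.
    attention_scores[c][t] -- unnormalised score for the same position, assumed
                              to come from a parallel attention branch (hypothetical).
    """
    if len(feature_maps) != len(attention_scores):
        raise ValueError("need one score map per feature map")
    pooled = []
    for fmap, scores in zip(feature_maps, attention_scores):
        if len(fmap) != len(scores):
            raise ValueError("score map must match feature-map size")
        weights = softmax(scores)  # weights within this map sum to 1
        pooled.append(sum(w * f for w, f in zip(weights, fmap)))
    return pooled  # one value per feature map


print(attention_pool([[1.0, 2.0, 3.0], [4.0, 0.0, 0.0]],
                     [[0.0, 0.0, 0.0], [5.0, 0.0, 0.0]]))
```

For example, `attention_pool([[1.0, 2.0, 3.0]], [[0.0, 0.0, 0.0]])` returns `[2.0]` (up to floating-point rounding), because equal scores reduce to a plain average. Larger scores shift the pooled value towards the corresponding positions, which is how the attention branch can emphasise the most discriminative parts of a spectrogram.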

The raw audio data is collected from the Free Music Archive (FMA), and the valence metric is queried from the Spotify API.
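This README does not say how the continuous valence value becomes a mood label for classification. Spotify describes valence as a measure from 0.0 to 1.0 of the musical positiveness of a track. The sketch below is therefore a purely hypothetical preprocessing step: it splits valence at an assumed threshold of 0.5 into a binary mood label and pairs it with an integer genre label. The threshold, the record layout `(track_id, genre, valence)` and the names `valence_to_mood` and `build_targets` are all assumptions for illustration.

```python
from typing import Dict, List, Tuple

# Hypothetical threshold: the README does not state how valence is discretised.
VALENCE_THRESHOLD = 0.5


def valence_to_mood(valence: float, threshold: float = VALENCE_THRESHOLD) -> int:
    """Map a valence score in [0, 1] to a binary mood label (1 = positive, 0 = negative)."""
    if not 0.0 <= valence <= 1.0:
        raise ValueError(f"valence must lie in [0, 1], got {valence}")
    return int(valence >= threshold)


def build_targets(tracks: List[Tuple[str, str, float]],
                  genre_index: Dict[str, int]) -> List[Tuple[str, int, int]]:
    """Pair each hypothetical (track_id, genre, valence) record with integer
    genre and mood targets for the two output heads."""
    return [(tid, genre_index[genre], valence_to_mood(v)) for tid, genre, v in tracks]


print(build_targets([("t1", "Rock", 0.8), ("t2", "Hip-Hop", 0.2)],
                    {"Rock": 0, "Hip-Hop": 1}))
```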

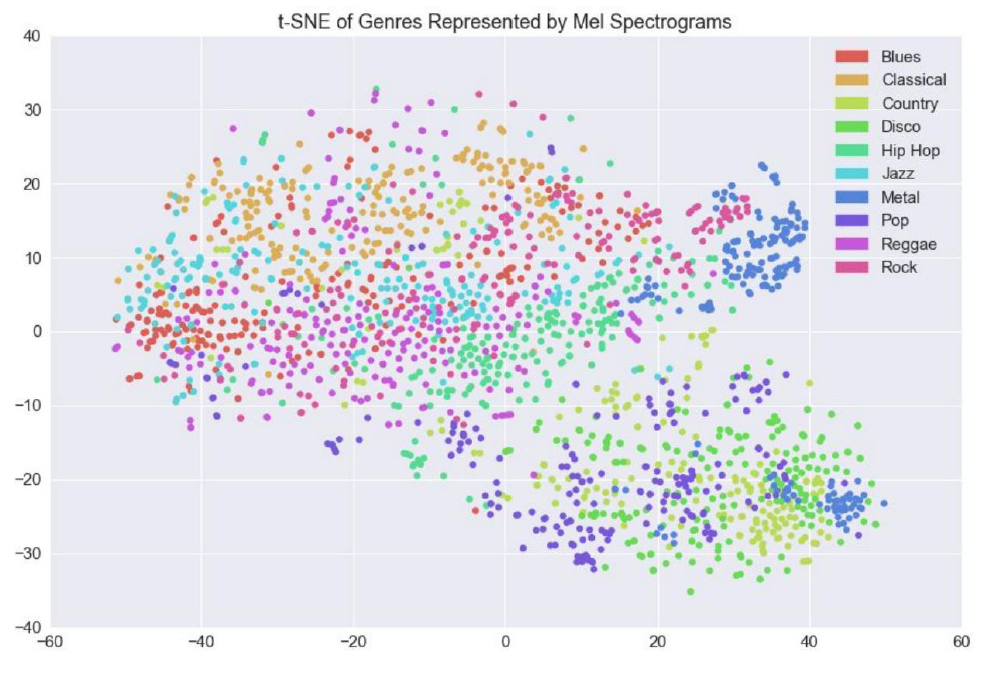

The dimensionality of the data is reduced with t-SNE, and the resulting distribution is visualized below.
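For readers unfamiliar with t-SNE, the quantity it optimises can be sketched in a few lines of Python. This is background on the standard algorithm, not the project's code. t-SNE converts pairwise distances between embedded points into Student-t similarities q_ij and moves the points to minimise the KL divergence from the high-dimensional affinities P. The perplexity-based construction of P and the gradient-descent loop are omitted. Which t-SNE implementation and settings the project used is not stated; in practice a library implementation such as scikit-learn's `sklearn.manifold.TSNE` would normally be used.

```python
import math
from typing import List, Sequence


def tsne_low_dim_affinities(points: Sequence[Sequence[float]]) -> List[List[float]]:
    """Joint probabilities q_ij between embedded points, as defined by t-SNE.

    q_ij = (1 + ||y_i - y_j||^2)^-1 / sum_{k != l} (1 + ||y_k - y_l||^2)^-1,
    with q_ii = 0, so the whole matrix sums to 1.
    """
    n = len(points)
    if n < 2:
        raise ValueError("need at least two points")
    kernel = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i != j:
                sq_dist = sum((a - b) ** 2 for a, b in zip(points[i], points[j]))
                # Heavy-tailed Student-t kernel with one degree of freedom.
                kernel[i][j] = 1.0 / (1.0 + sq_dist)
    total = sum(sum(row) for row in kernel)
    return [[k / total for k in row] for row in kernel]


def kl_divergence(p: Sequence[Sequence[float]], q: Sequence[Sequence[float]],
                  eps: float = 1e-12) -> float:
    """KL(P || Q): the cost t-SNE minimises by moving the embedded points.

    p holds the high-dimensional affinities, q the low-dimensional ones;
    terms with p_ij == 0 contribute nothing.
    """
    return sum(
        p_ij * math.log(p_ij / max(q_ij, eps))
        for p_row, q_row in zip(p, q)
        for p_ij, q_ij in zip(p_row, q_row)
        if p_ij > 0
    )


q = tsne_low_dim_affinities([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]])
print(q[0][1], q[1][2])
```

The heavy-tailed kernel lets moderately dissimilar points sit far apart in two dimensions, which is why t-SNE plots tend to show well-separated clusters such as the genre groups in the figure.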

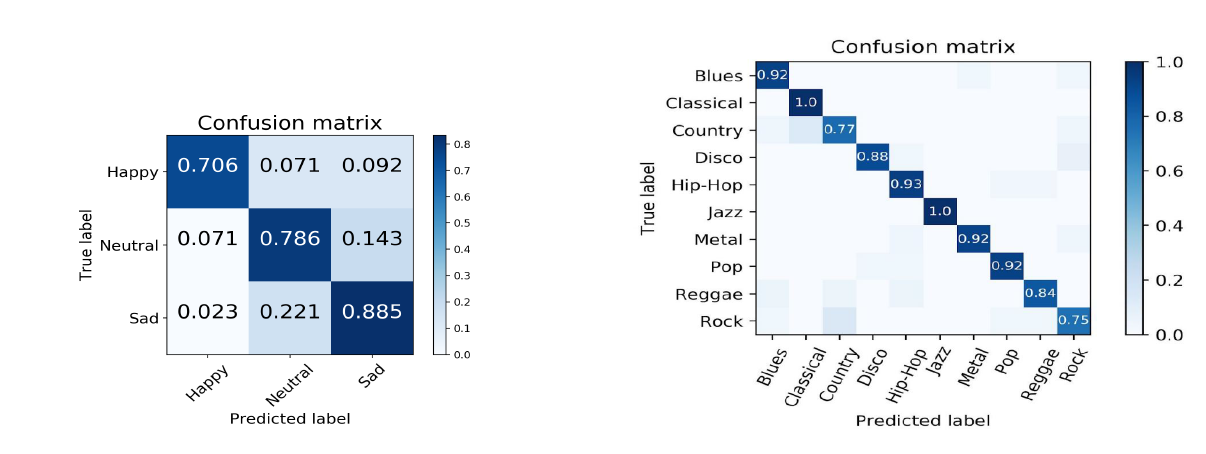

Results

The best performance reaches around 92% on the test set for both genre and mood. Please refer to the paper for more details.